Can you decipher the digital hieroglyphs that sometimes appear when the world of computers miscommunicates? The cryptic symbols and distorted characters are a common plight, but understanding their origins is the first step towards reclaiming the clarity of the written word.

The digital realm, a universe of ones and zeros, relies on a complex system of encoding to represent the vast array of characters we use every day. When these systems falter, the result can be a frustrating jumble of symbols, a digital language barrier that prevents us from accessing the information we seek. From the subtle nuances of diacritics in Polish to the complex characters of Chinese, the accurate representation of text is essential for effective communication. Whether it's a document riddled with question marks where accents should be or a website displaying a series of seemingly random characters, the cause of these issues can usually be traced back to problems with character encoding. This article will delve into the intricacies of character encoding, providing a comprehensive guide to understanding and resolving these common digital dilemmas.

Before we can understand how text goes wrong, we must first understand how it's supposed to go right. The foundation of digital text is character encoding, a system that maps characters (letters, digits, symbols, and more) to numerical values, which the computer then uses to store, transmit, and display text. Several encoding schemes exist, each with its own set of supported characters and its own way of representing them as bytes, so the choice of scheme is critical.

The most common schemes are ASCII, ISO-8859-1, and UTF-8. ASCII (American Standard Code for Information Interchange) is the oldest and simplest: it defines 128 characters, covering the English alphabet, digits, basic punctuation, and control codes. ISO-8859-1, also known as Latin-1, extends ASCII to a full byte (256 code values) and adds the accented letters used in Western European languages. UTF-8 (Unicode Transformation Format, 8-bit) is a more modern and versatile scheme that can encode every character in the Unicode standard, and therefore the scripts of virtually all the world's languages, using one to four bytes per character. Its one-byte range is identical to ASCII, so plain ASCII text is already valid UTF-8. This combination of full coverage and backward compatibility has made UTF-8 the most widely used character encoding on the web today.
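The short Python sketch below makes these differences concrete by encoding the same three characters with each scheme. It relies only on Python's built-in codecs (`ascii`, `latin-1`, `utf-8`); the helper name `encode_all` is illustrative.

```python
def encode_all(text: str) -> dict:
    """Encode text with ASCII, ISO-8859-1 and UTF-8; None marks a failure."""
    results = {}
    for codec in ("ascii", "latin-1", "utf-8"):
        try:
            results[codec] = text.encode(codec)
        except UnicodeEncodeError:
            # The character is outside this scheme's repertoire.
            results[codec] = None
    return results


for sample in ("A", "é", "ł"):
    print(sample, encode_all(sample))
# A {'ascii': b'A', 'latin-1': b'A', 'utf-8': b'A'}
# é {'ascii': None, 'latin-1': b'\xe9', 'utf-8': b'\xc3\xa9'}
# ł {'ascii': None, 'latin-1': None, 'utf-8': b'\xc5\x82'}
```

The letter A is the same single byte in all three schemes, which is what ASCII compatibility means in practice, while é takes one byte in Latin-1 but two in UTF-8, and ł exists only in UTF-8.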

The Polish alphabet, for example, presents its own set of encoding challenges. It has 32 letters and uses the Latin script, but adds diacritics to represent specific Polish phonemes, giving nine letters that ASCII lacks: ą, ć, ę, ł, ń, ó, ś, ź, and ż. Rendering these correctly is essential for understanding Polish text. ISO-8859-1 cannot represent most of them (only ó is included), so Polish text has traditionally been stored in ISO-8859-2 (Latin-2) or Windows-1250, and today increasingly in UTF-8. When text is decoded with the wrong scheme, these letters are displayed incorrectly, hindering communication for Polish speakers and learners.
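A quick way to see which encodings can hold Polish text is to try encoding all nine diacritic letters. The sketch below does this with Python's built-in codecs; the codec list is an illustrative choice and `supports` is a hypothetical helper name. It also shows that the two legacy Polish encodings assign different byte values to the same letter, a classic source of garbled Polish.

```python
POLISH_LETTERS = "ąćęłńóśźż"  # the nine Polish letters with diacritics (lowercase)


def supports(codec: str, text: str) -> bool:
    """Return True if every character in text can be encoded with codec."""
    try:
        text.encode(codec)
    except UnicodeEncodeError:
        return False
    return True


for codec in ("latin-1", "iso-8859-2", "cp1250", "utf-8"):
    print(f"{codec:>10}: {supports(codec, POLISH_LETTERS)}")

# The two legacy Polish encodings disagree on some byte values:
print("ą".encode("iso-8859-2"), "ą".encode("cp1250"))  # b'\xb1' b'\xb9'
# So a Windows-1250 "ą" read as ISO-8859-2 turns into a different letter:
print(b"\xb9".decode("iso-8859-2"))  # š
```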

The fictional profile below shows the kind of background, combining linguistics, software engineering, and knowledge of character encoding systems, that prepares someone to work on these problems:

| Category | Details |
|---|---|
| Name | (Fictional Example: Anya Volkov) |
| Personal Information | Age: 35; Nationality: Russian-American; Residence: New York City |
| Education | Master of Science in Computer Science, Stanford University; Bachelor of Arts in Linguistics, Moscow State University. |
| Career Path | Software Engineer; AI Researcher; Language Technology Specialist. |
| Professional Achievements | Lead developer of a multilingual text processing tool; Published several research papers on natural language processing; Awarded a grant for innovative work in cross-lingual information retrieval. |
| Skills | Proficient in multiple programming languages (Python, Java, C++); Expert in NLP and machine learning; Knowledge of various character encoding systems. |
| Experience | Senior Software Engineer at a leading tech company; Research Scientist at a prestigious AI lab. |
| Website Reference | Example Profile |

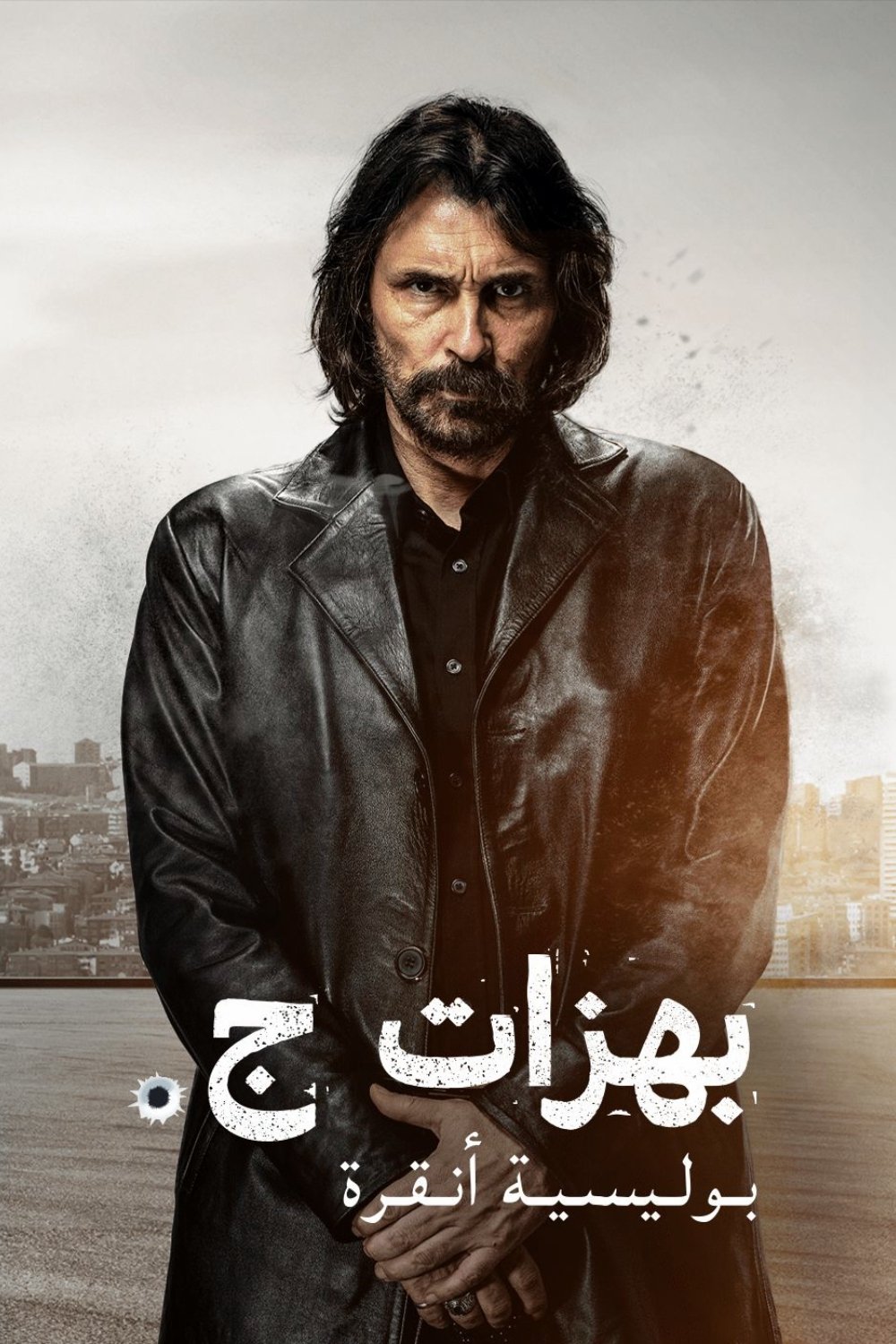

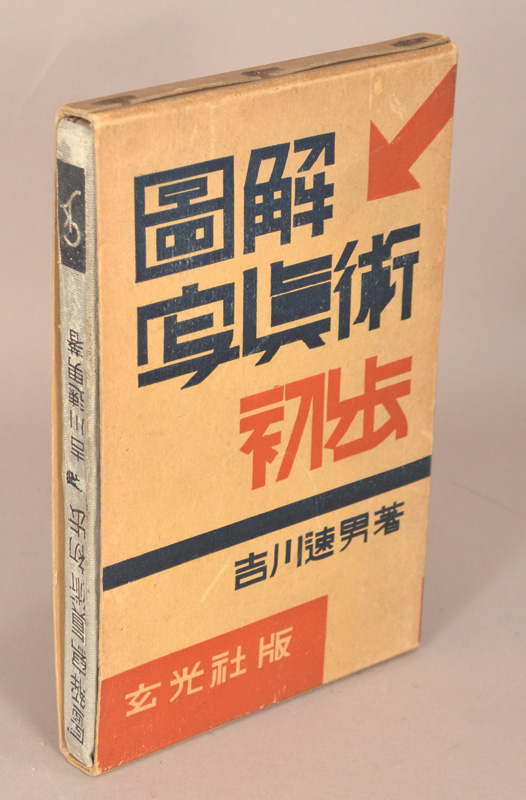

Languages such as Chinese pose some of the most pervasive encoding challenges. When Chinese characters are not rendered correctly, the text becomes completely unreadable and its information is lost to the reader. Chinese text circulates in several encodings: GB2312, GBK, and GB18030 for Simplified Chinese, Big5 for Traditional Chinese, and UTF-8 for both. All of these use several bytes per character (typically two in GBK and Big5, three in UTF-8), so decoding with the wrong scheme scrambles entire characters rather than a stray accent.
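To see why a mismatch destroys whole characters, the sketch below encodes the same two-character string, 中文 ("Chinese"), with Python's built-in `gbk`, `big5`, and `utf-8` codecs and then tries to read GBK bytes as UTF-8. The sample string is an arbitrary choice for illustration.

```python
SAMPLE = "中文"  # "Chinese (language)": two common characters

for codec in ("gbk", "big5", "utf-8"):
    data = SAMPLE.encode(codec)
    print(f"{codec:>6}: {len(data)} bytes -> {data.hex(' ')}")

# Bytes produced by one encoding are generally not valid in another:
try:
    SAMPLE.encode("gbk").decode("utf-8")
except UnicodeDecodeError as exc:
    print("GBK bytes are not valid UTF-8:", exc.reason)
```

Each character occupies two bytes in GBK and Big5 but three in UTF-8, so a decoder using the wrong scheme splits and regroups bytes across character boundaries.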

Consider a sequence like 具有éœé›»ç”¢çŸè£ç½®ä¹‹å½±åƒè¼¸å…¥è£ç½®, the kind of jumble that appears when encodings are mixed up. It is mostly Traditional Chinese text whose UTF-8 bytes were decoded as Windows-1252, so each Chinese character turned into two or three Latin-looking symbols. The tail "å½±åƒè¼¸å…¥è£ç½®", for instance, is 影像輸入裝置 ("image input device"). A few of the original bytes have no Windows-1252 equivalent and were dropped along the way, so in this case the damage cannot be fully reversed from the garbled text alone. The key to fixing such text is identifying the character set it was actually written in and then decoding the original bytes with that encoding.
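The sketch below reproduces this kind of damage and, where possible, undoes it. It assumes the specific mix-up described above (UTF-8 bytes read as Windows-1252, Python codec `cp1252`); `to_mojibake` and `repair` are hypothetical helper names.

```python
def to_mojibake(text: str) -> str:
    """Simulate the bug: UTF-8 bytes decoded as Windows-1252.

    errors="ignore" silently drops the bytes Windows-1252 leaves undefined
    (0x81, 0x8D, 0x8F, 0x90, 0x9D), which is what appears to have happened
    to the sample string above.
    """
    return text.encode("utf-8").decode("cp1252", errors="ignore")


def repair(garbled: str) -> str:
    """Reverse the mistake: recover the bytes as Windows-1252, decode as UTF-8."""
    return garbled.encode("cp1252").decode("utf-8")


garbled = to_mojibake("輸入")  # "input"
print(garbled)                 # è¼¸å…¥
print(repair(garbled))         # 輸入

print(to_mojibake("影像輸入裝置"))  # å½±åƒè¼¸å…¥è£ç½®, the tail of the sample

try:
    repair(to_mojibake("裝置"))  # the UTF-8 form of 裝 contains the undefined byte 0x9D
except UnicodeDecodeError:
    print("bytes were lost; decode the original bytes instead")
```

The round trip only works when no byte was dropped; otherwise the reliable fix is to go back to the source data and decode it with the correct encoding.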

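HTML (HyperText Markup Language) plays a pivotal role in defining character encoding for web pages. With the `<meta>` tag, developers declare the character encoding of their content so that the browser interprets and displays the text correctly. In HTML5 the short form `<meta charset="utf-8">` is sufficient. Older pages use the attribute `http-equiv="Content-Type"`, which sets the document's content type together with its encoding: for example, `<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">` explicitly tells the browser to use UTF-8. The declaration must appear within the first 1024 bytes of the document, and a charset sent in the server's HTTP `Content-Type` header takes precedence over it.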
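To check which encoding a page claims, it can help to extract the `<meta>` declaration programmatically. The sketch below handles both forms described above using Python's standard `html.parser` module. It is a simplification: browsers actually run the HTML Standard's encoding-sniffing algorithm on the raw bytes, whereas this works on already-decoded text. `CharsetSniffer` and `declared_charset` are illustrative names.

```python
from html.parser import HTMLParser


class CharsetSniffer(HTMLParser):
    """Record the first charset declared by a <meta> tag, if any."""

    def __init__(self) -> None:
        super().__init__()
        self.charset = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.charset is not None:
            return
        # html.parser lowercases attribute names; valueless attributes give None.
        attrs = {name: (value or "") for name, value in attrs}
        if "charset" in attrs:  # HTML5 form: <meta charset="utf-8">
            self.charset = attrs["charset"].strip().lower()
        elif attrs.get("http-equiv", "").lower() == "content-type":
            # Older form: content="text/html; charset=UTF-8"
            for part in attrs.get("content", "").split(";"):
                key, _, value = part.strip().partition("=")
                if key.lower() == "charset":
                    self.charset = value.strip().lower()


def declared_charset(html_text: str):
    """Return the lowercased charset declared in html_text, or None."""
    sniffer = CharsetSniffer()
    sniffer.feed(html_text)
    return sniffer.charset


print(declared_charset('<head><meta charset="UTF-8"></head>'))  # utf-8
```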

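W3Schools provides reference pages on HTML and character sets, explaining how the correct encoding leads to accurate text representation. Special characters can also be written as character references, either a named entity such as `&eacute;` or a numeric reference such as `&#233;`; because these references consist only of ASCII characters, they display correctly even when the page's encoding cannot represent the character directly. The following table lists several accented Latin letters with their code points: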

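| Character | Decimal | Hex | Named entity | Description |
|---|---|---|---|---|
| ç | 231 | 00e7 | `&ccedil;` | Latin small letter c with cedilla |
| è | 232 | 00e8 | `&egrave;` | Latin small letter e with grave |
| é | 233 | 00e9 | `&eacute;` | Latin small letter e with acute |
| ê | 234 | 00ea | `&ecirc;` | Latin small letter e with circumflex |
| ë | 235 | 00eb | `&euml;` | Latin small letter e with diaeresis |
| ì | 236 | 00ec | `&igrave;` | Latin small letter i with grave |
| í | 237 | 00ed | `&iacute;` | Latin small letter i with acute |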
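The relationship between the characters in the table and their references can be checked with Python's standard `html` module. The sketch below pairs each letter with its standard HTML named entity and confirms that the named, decimal, and hexadecimal forms all resolve to the same character.

```python
import html

# The accented letters from the table, with their standard HTML named entities.
ENTITIES = {"ç": "&ccedil;", "è": "&egrave;", "é": "&eacute;", "ê": "&ecirc;",
            "ë": "&euml;", "ì": "&igrave;", "í": "&iacute;"}

for char, named in ENTITIES.items():
    code = ord(char)
    decimal_ref = f"&#{code};"   # e.g. &#231;
    hex_ref = f"&#x{code:04x};"  # e.g. &#x00e7;
    # Named, decimal, and hexadecimal references all resolve to the same character.
    assert html.unescape(named) == html.unescape(decimal_ref) == html.unescape(hex_ref) == char
    print(char, code, f"{code:04x}", named, decimal_ref, hex_ref)
```

In a page correctly served as UTF-8 these letters can simply be typed directly; references are mainly useful when the encoding cannot be relied upon.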

The PHP programming language offers tools for handling encoding conversions. For example, `iconv($from_encoding, $to_encoding, $string)` converts a string from one character encoding to another. However, the PHP Manual notes that `iconv` may not work as expected on some systems and recommends installing the GNU libiconv library in that case.
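For comparison, the same kind of conversion in Python (the language used for the other examples here) is a decode followed by an encode. The sketch below is a rough analogue of `iconv`, not PHP code; `convert` is a hypothetical helper, and Python's `errors="replace"` is only loosely comparable to the `//IGNORE` and `//TRANSLIT` suffixes that PHP's `iconv` accepts.

```python
def convert(data: bytes, from_encoding: str, to_encoding: str) -> bytes:
    """Re-encode bytes, conceptually like iconv($from, $to, $string)."""
    return data.decode(from_encoding).encode(to_encoding)


latin2 = "Łódź".encode("iso-8859-2")  # legacy Polish bytes
utf8 = convert(latin2, "iso-8859-2", "utf-8")
print(latin2, "->", utf8)

# Characters missing from the target fail loudly by default; a lossy policy
# must be chosen explicitly. Here Ł and ź become "?" in Latin-1:
lossy = "Łódź".encode("latin-1", errors="replace")
print(lossy)  # b'?\xf3d?'
```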

For those facing character encoding issues, particularly when dealing with garbled text, online tools such as 在线乱码恢复 (Online Garbled Code Recovery) can be invaluable. By inputting the garbled text, these tools attempt to identify the correct encoding and convert the text back to its original form. These tools often support a variety of encodings, including UTF-8, GBK, and Big5, making them useful for recovering text from diverse sources.
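One plausible way such recovery tools work (an assumption, not a description of any particular site) is to test combinations of "the text was misread as encoding X, but is really encoding Y" and keep those that decode cleanly. The sketch below implements that brute-force idea; the encoding lists and the name `recovery_candidates` are illustrative.

```python
# Hypotheses to test; extend the lists for other scripts.
MISREAD_AS = ("cp1252", "latin-1", "gbk")  # how the bytes may have been wrongly decoded
ACTUALLY = ("utf-8", "gbk", "big5")        # what the bytes may really be


def recovery_candidates(garbled: str) -> dict:
    """Try each (misread-as, actually) pair and keep the ones that round-trip."""
    candidates = {}
    for wrong in MISREAD_AS:
        for right in ACTUALLY:
            if wrong == right:
                continue
            try:
                fixed = garbled.encode(wrong).decode(right)
            except UnicodeError:  # impossible under this hypothesis
                continue
            if fixed != garbled:
                candidates[(wrong, right)] = fixed
    return candidates


sample = "輸入".encode("utf-8").decode("cp1252")  # simulated garbling: è¼¸å…¥
for (wrong, right), text in recovery_candidates(sample).items():
    print(f"misread as {wrong}, actually {right}: {text}")
```

Several hypotheses can succeed and produce different text, so a person (or a language-frequency heuristic) still has to pick the plausible result.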

The Unicode standard provides a comprehensive set of characters and codes, including a range of special characters and symbols. Here's a snippet from the Unicode character table:

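| Code point | Character | UTF-8 encoding | Name |
|---|---|---|---|
| U+FF00 | (none) | EF BC 80 | (unassigned code point) |
| U+FF01 | ！ | EF BC 81 | FULLWIDTH EXCLAMATION MARK |
| U+FF02 | ＂ | EF BC 82 | FULLWIDTH QUOTATION MARK |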

In this table, the first column gives the Unicode code point, the second the character itself, the third its UTF-8 encoding, and the fourth its name. Knowing these byte sequences helps in deciphering garbled text. The FULLWIDTH EXCLAMATION MARK, for instance, is EF BC 81 in UTF-8; when those bytes are misread as Windows-1252 or Latin-1, the first two appear as "ï¼", so a run of "ï¼" in garbled text often points to fullwidth punctuation that was decoded with the wrong encoding.
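Rows like these can be generated with Python's standard `unicodedata` module, which also makes it easy to see what each sequence looks like when misread. The sketch below assumes the Windows-1252 misreading discussed above; `describe` is an illustrative helper name.

```python
import unicodedata


def describe(code_point: int) -> tuple:
    """Code point, UTF-8 bytes, name, and the bytes misread as Windows-1252."""
    char = chr(code_point)
    utf8 = char.encode("utf-8")
    return (
        f"U+{code_point:04X}",
        utf8.hex(" ").upper(),
        unicodedata.name(char, "<unassigned>"),
        utf8.decode("cp1252", errors="replace"),  # undefined bytes become U+FFFD
    )


for cp in (0xFF00, 0xFF01, 0xFF02):
    print(*describe(cp))
```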

To prevent character encoding issues, developers should follow best practices, above all using UTF-8 consistently from end to end. When creating web pages, declare the character set with a `<meta charset="utf-8">` tag in the document's `<head>`. Use text editors and IDEs that save files as UTF-8 by default, and make sure data sources and databases store text in the same encoding. Regular testing of text rendering, with samples that include non-ASCII characters, helps detect problems early.
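In application code, the same principle means naming the encoding whenever text crosses into or out of bytes, rather than relying on a default that may depend on the platform's locale settings. A minimal Python sketch, assuming files are meant to be UTF-8; `save_text` and `load_text` are hypothetical helpers, and the Polish pangram is just a test string containing all nine diacritic letters.

```python
import tempfile
from pathlib import Path

SAMPLE = "Zażółć gęślą jaźń"  # Polish pangram with all nine diacritic letters


def save_text(path: Path, text: str) -> None:
    """Write text as UTF-8, naming the encoding explicitly."""
    path.write_text(text, encoding="utf-8")


def load_text(path: Path) -> str:
    """Read text back with the same explicit encoding."""
    return path.read_text(encoding="utf-8")


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "sample.txt"
    save_text(path, SAMPLE)
    raw = path.read_bytes()
    print(len(SAMPLE), "characters stored in", len(raw), "bytes")
    assert load_text(path) == SAMPLE  # round trip preserves every letter
```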

Debugging a character encoding problem usually involves several steps. First, identify where the text comes from and determine which encoding that source uses. Next, examine the output and pinpoint which characters are wrong. If the text is a web page, inspect the HTML source and confirm that the declared character set matches the encoding the file was actually saved in. Then use online tools or programming libraries to attempt conversions, and review system configurations and software settings along the way.
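For the first two steps, a small helper that inspects the raw bytes before any decoding can narrow things down. The sketch below checks for a byte-order mark, then tests whether the data is valid UTF-8 and reports where it first fails; it also counts U+FFFD replacement characters, which indicate that some earlier step already decoded the text lossily. The function names are illustrative, and the checks are heuristics rather than a definitive detector (pure ASCII, for example, passes as UTF-8).

```python
import codecs


def diagnose(data: bytes) -> str:
    """Give a first guess about raw bytes before any decoding is attempted."""
    if data.startswith(codecs.BOM_UTF8):
        return "UTF-8 with byte-order mark"
    if data.startswith((codecs.BOM_UTF16_LE, codecs.BOM_UTF16_BE)):
        return "UTF-16 (byte-order mark present)"
    try:
        data.decode("utf-8")
    except UnicodeDecodeError as exc:
        bad = data[exc.start:exc.start + 4]
        return f"not valid UTF-8: first bad byte at offset {exc.start} ({bad.hex(' ')})"
    return "valid UTF-8 (pure ASCII also passes this check)"


def lost_characters(text: str) -> int:
    """Count U+FFFD replacement characters, a sign of an earlier lossy decode."""
    return text.count("\ufffd")


print(diagnose("café".encode("latin-1")))
print(lost_characters(b"caf\xe9".decode("utf-8", errors="replace")))
```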

The problem of character encoding may seem complicated. However, by learning the underlying concepts and employing best practices, one can reduce the frequency of errors and find the right solution.